[Recap] TiM Seminar 2022-23 “Social Imaginaries of Ethics and AI” – Sonja Rebecca Rattay and Irina Shklovski (Copenhagen University) and Marco Rozendaal (TU Delft)

by Jakob Henselmans

1. Note on the online environment

Everybody should join the Miro board. There will be an interactive session at the end.

2. Very short introduction by Maaike Bleeker.

3. Sonja Rebecca Rattay – introduction to research:

Design practices, ethics and praxis: how do social imaginaries provide frames for how AI is understood? What do we mean when we say ‘AI’? It has become a buzzword. A dictionary definition: “the ability of a computer to perform tasks commonly associated with intelligent beings”. What does intelligence mean here? Since AI refers to several different things, what am I referring to?

It could be Alexa (a voice assistant), or full-on robots with neural-network-like systems. But it also captures ideas of what technologies and smart technologies might be in the future. If you ask Google what ‘AI’ is, you get an image of an ‘electronized brain’. AI has become a canvas onto which ideas about technology are projected, not merely in scholarship but in daily life too, making it a screen for utopian and dystopian dreams. For Hawking, AI, bad from the get-go, can become good if we subject it to a few demands. These steps reveal a certain simplicity in our narratives around AI: on one side, breakthroughs in medicine, physics, and urban planning; on the other, concerns about data and algorithms, and dangers to nations and nationality. This is the overall picture, which then spawns a debate about ‘ethical AI’.

What does ‘ethical’ mean here? Again, it remains unclear: trustworthy, robust, transparent, safe, explainable, and so on. AI becomes a vague problem with vague answers. These discussions engage with what people mean when they say ‘ethical AI’. Ethics carries a deeply embedded concern with the ‘good life’, so dealing with ethics involves a huge value judgment. But there are a lot of philosophical discussions on frameworks, and there is no sensus communis about the ethical. Most commit to a certain narrative about what society at large should value in order to live a good life.

In relation to AI, processes often become important: we want certain processes around technology to work in certain ways. This makes ethics inherent to these processes; we do not need a framework for that. AI has a way of bringing these tensions of value and power strongly to the surface for a lot of people. For example, AI systems punish delivery drivers for paying attention to the environment (here, values are deeply embedded in the AI’s processing). This leads to the question: who is responsible for these values? The programmers, maybe, but it is not just a question of moral philosophy; it is also about design, business, and government. This brings us to a discussion of the future: it becomes about which consequences should be avoided and which fostered. Imaginaries are projections of the present into potential or plausible futures. Imaginaries of the ethical or good life are desirable; directions not deemed part of the good life are less so. But this structure turns futures into linear paths, whereas what is desirable might very much depend on where we start from. ‘Sociotechnical imaginaries’ is a concept that describes actions and social structures.

Think of the ‘smart home’: a concept rife with overlapping imaginaries, such as hedonistic personalization. Imaginaries around AI are being investigated at the national and governmental level, where AI is positioned as a massively disruptive development. What are the alternatives to these imaginaries? We do not all start from the same present, since people have different experiences, and my research is trying to pinpoint where these differences lie.

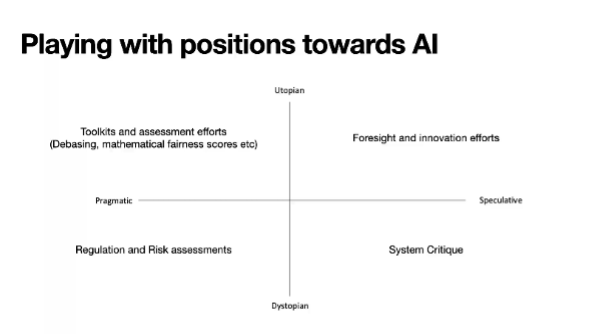

Approaches to positioning AI differ in their view of the future. On one hand there is the blue, beautiful utopia (smart, automated, optimized, efficient); on the other, a dystopian view of the human as a cog in the machine. The former we find in approaches that foster innovation and foresight: AI is seen as having unbounded potential to transform businesses and companies, and by following certain steps, we can unlock this potential. The latter we find in dystopian/pragmatic approaches, which focus on regulation and risk reduction and attempt to make AI ethical. The good potential of AI is left to itself, and the risks are taken up instead. Risk itself is then qualified further into high risk and low risk, and the question becomes: how can we limit harms? Further toward the dystopian end is a large body of systemic criticism, which holds that AI is inherently harmful and that the conditions that produced AI in the first place mean it will never be anything else. The structural harms are too deeply built into AI to be removed from it.

So there are two axes along which positions can be placed: foresight (pragmatic/speculative) [what can AI do?] and judgment (utopian/dystopian) [is AI inherently harmful or positive?]. One axis runs from utopian to dystopian, the other from pragmatic to speculative. See below:

That is where my research currently stands. We will now go to the Miro board, but questions first.

[No questions asked.]

4. Miro board

[Not many responses. “Who is in the first quarter?” Few responses. A line of questions is given, and break-out rooms are set up.]

5. Break-out rooms

Maaike: We’ll move to break-out rooms for 15 minutes. Everybody has positioned themselves; each person clarifies their position for 1 minute.

Participants A, B, and C are in the pragmatic/dystopian block. Participant A takes a dystopian view: you see the risks and want to regulate. On the other hand, what kind of regulations do you then need, and is this not also a problem? Participant D is in the utopian/speculative camp: AI could be helpful as a tool employed in creative work. Participant E found it difficult to position themselves: there is the pragmatic problem of the deeply rooted racism in AI systems, but there may be other modes of critical/creative thinking that AI could enable (a criticality/creativity approach). Participant B agrees with Participant C: it’s naïve to think we will get rid of AI, and we had better think of new ways of engaging with it; but then again, who is going to regulate this?

We are divided into utopian and dystopian camps. Participant A mentions that even if AI can be used creatively in various places, it does not seem to be used that way. But Marco makes a case for medical imaging: AI can detect cancerous cells earlier than physicians can. Participant E brings up contextualization: if AI were contained to medical settings, would it still be so harmful? Participant D agrees with Participant E: AI is not so detached and autonomous; it is made by human beings, so strategic optimism also matters here.

Marco adds: there seems to be a focus in the group on the here and now; why? Participant F, a science-fiction writer, is future-oriented.

6. Back in the general room

Sonja asks: Did any group have major disagreements? [Few responses.]

Mireia talks about how most people were comfortable in their own quarter.

Sonja: Was there any particular point that stood out?

Mireia: The ‘abolishment’ part in the fourth quarter was found to be quite controversial. Giulio shifted from 4 to 3 and asked: “Which developments lead to AI, and who is profiting?”

Participant G: We’d need to change that for a utopian use of AI. If that changes, then AI would still be developed; technology is not to be abolished, for it can help.

Sonja: We want to limit the harms, but not in doing so limit the potentials either.

Participant A says: Abolishment cannot mean getting rid of AI altogether; rather, we need to fundamentally rethink what it is now.

Participant D: Our positions are based on our speculations, and the human part in AI is not only a problem but also a potential: it means things can be changed, and that there are possibilities.

Sonja talks about her break-out group, clustered around quarter 3. They talked about intervention at a political-economic level. Participant G talks about deciding to what ends AI should and should not be used; these are not only ethical questions but also very much political ones. What would be the utopia of AI? Once perfected, AI would be superior to every human. How do we think about that?

Participant H moved towards quarter 3 and explains why.

7. Sonja takes over again

Now that we have heard how these positions are productive, let’s look at the example cases on the Miro board to see how these different approaches to regulating/constructing AI apply to specific cases. The idea is that the conversations we had in the first round can now be applied to a more specific question that raises practical concerns. Adaptation! Does this change approaches, do opinions shift, or do we stay with the same ideas? We’ll discuss this in the break-out rooms.

8. Break-out rooms

We read the case (on Tesla’s “Smart Summon” functionality).

Participant E asks critically: Why does this function even exist? Is it not merely to show off?

Participant F: Quite a lot of technological developments have been made ‘just because they could be made’. Is it ego that makes it possible to sustain the energy required for breakthrough innovation over a very long time? The workers’ motivation is not ‘the future of humanity’.

Participant E: Tesla stands for the imaginary of a particular kind of human (Elon Musk style).

Participant F asks: Why are we still in the habit of delegating responsibility to Musk-like individuality?

Marco talks about how AI is usually individual-focused rather than responding to sociality as a web/network.

Participant A: Musk is science-fiction dystopia. What imaginaries go into it when somebody is making this? Data feminism matters here, and how could this ever go hand in hand with a company owned by a billionaire?

Participant F: Responding to Tesla polemically also makes your own approach more visible. The boat rocks back and forth.

9. Wrap-up by Sonja

Marco, Mireia, could you summarize the discussions?

Mireia: There was a huge consensus. Participants G and I invited us to think of potential utopian cases: where could emotion recognition be a positive thing? It could be used as an accessibility tool, but why should tech be the solution there? Though there was some utopian speculation, the pragmatic stance prevailed: we should first think about who needs what before we champion technology.

Session closed.